As Google continues to refine its family of modern generative AI models, known collectively as Gemini, the company releases more advanced versions for free. The latest wide release is Gemini 2.0 Flash, Google’s fastest and most effective model yet.

Gemini 2.0 Flash Is Available to Free Users and Developers

Exiting triumphantly from its experimental phase is Gemini 2.0 Flash, a “highly efficient workhorse model,” as described on Google’s blog, The Keyword. Known for low latency and strong performance, Gemini 2.0 Flash is now available in a few new places: the Gemini app, Google AI Studio, and Vertex AI.

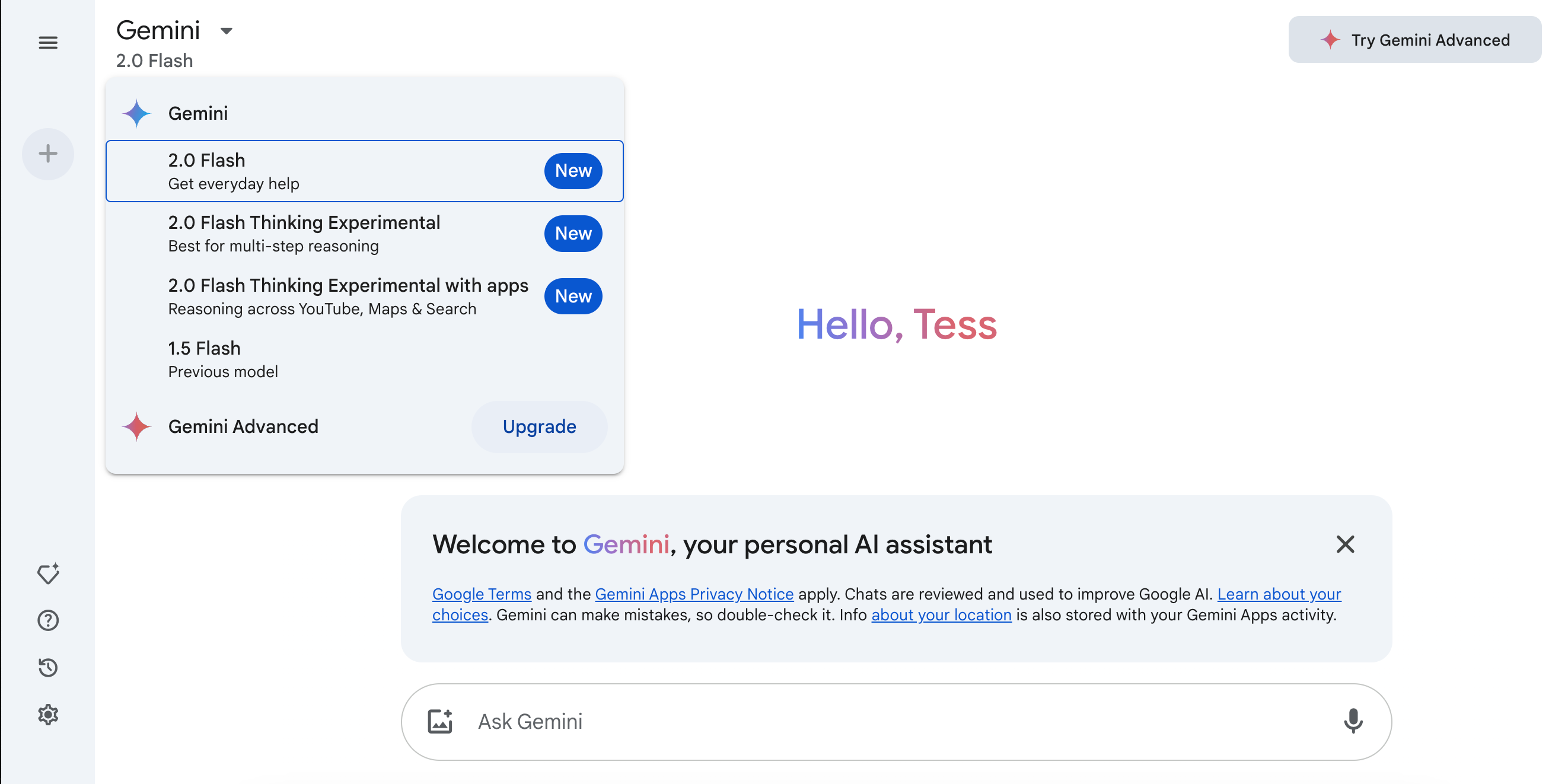

Gemini App

On January 30th, 2025, Google added the model to the Gemini app, available to both paying and non-paying users. You can simply go to Gemini’s web, desktop, or mobile app, and it will default to Gemini 2.0 Flash.

You do still have the option to select Gemini 1.5 Flash, Google’s previous model. I’m not sure why you would, but there could be something I’m missing as a basic user.

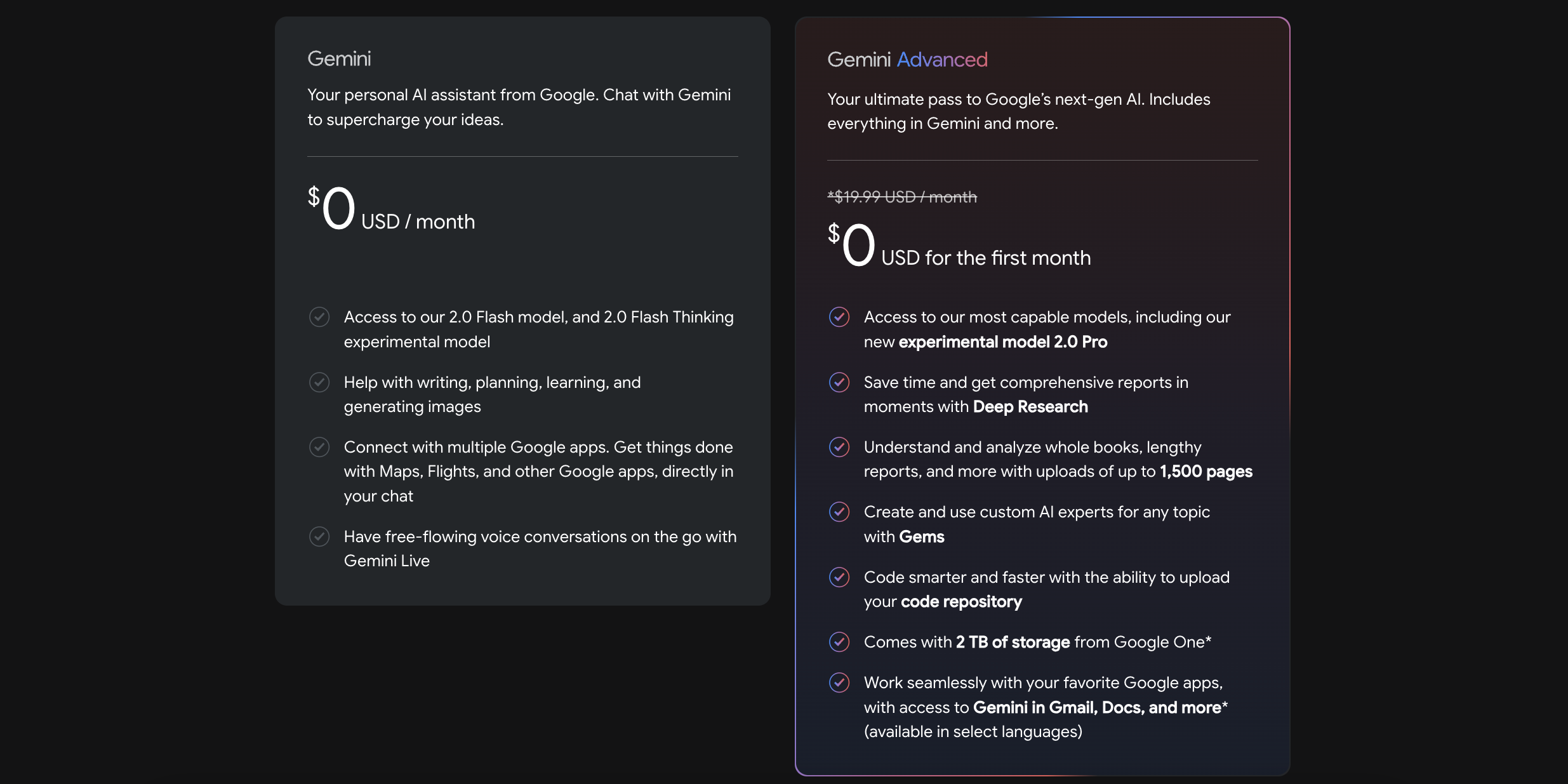

While you don’t need to pay for Gemini Advanced to use 2.0 Flash, a paid subscription will still get you extra perks. These premium offerings include access to more experimental models, the ability to upload lengthy documents for analysis, and more. You can check out the full list of benefits on Google’s Gemini Advanced page.

Google AI Studio and Vertex AI

In addition to bringing it to everyday users, Google has now made Gemini 2.0 Flash available to developers by adding it to the Google AI Studio and Vertex AI platforms. Developers can build applications using 2.0 Flash on both of these platforms, which cater to different use cases.
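For developers curious what a call to 2.0 Flash might look like, here is a minimal sketch using only Python's standard library. It assumes the public Gemini API REST endpoint used with Google AI Studio API keys and the model ID `gemini-2.0-flash`; neither is spelled out in Google's announcement, so check the current API documentation before relying on them. The helper names `build_request` and `extract_text` are hypothetical, and Vertex AI uses a different endpoint and authentication scheme that this sketch does not cover.

```python
import json
import urllib.request

# Assumption: base URL and model ID for the Gemini API (Google AI Studio keys).
API_BASE = "https://generativelanguage.googleapis.com/v1beta"
MODEL = "gemini-2.0-flash"


def build_request(prompt: str, api_key: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    url = f"{API_BASE}/models/{model}:generateContent"
    # A single user turn containing one text part.
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def extract_text(response: dict) -> str:
    """Join the text parts of the first candidate in a decoded response."""
    parts = response["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)
```

To actually send the request, you would pass the result of `build_request(...)` to `urllib.request.urlopen`, decode the JSON body, and hand it to `extract_text`. Keeping the network call separate makes the request easy to inspect before any API quota is spent.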

It’s All in the 2.0 Flash Family

While making Gemini 2.0 Flash more widely available, Google has also shared early versions of other models in the “2.0 family,” including Gemini 2.0 Pro and Gemini 2.0 Flash-Lite. Additionally, Google has released Gemini 2.0 Flash Thinking Experimental in the Gemini app; it was previously only accessible via Google AI Studio.

According to Google, these early models will start with text output and have additional modalities in the future:

“All of these models will feature multimodal input with text output on release, with more modalities ready for general availability in the coming months.”

If you, like me, find it challenging to keep up with the various iterations of Gemini models, here’s a quick breakdown of the newest releases:

| Model | Status | Available On | Best For |
|---|---|---|---|
| Gemini 2.0 Flash | Generally Available | Gemini app (all users), Google AI Studio, Vertex AI | Fast responses and stronger performance compared to 1.5. Useful for everyday tasks like brainstorming, learning, writing, as well as for developers to build applications. |
| Gemini 2.0 Flash-Lite | Public Preview | Google AI Studio, Vertex AI | Cost-effective yet updated option for developers. It has “better quality than 1.5 Flash, at the same speed and cost.” |
| Gemini 2.0 Pro | Experimental | Gemini app (Advanced users), Google AI Studio, Vertex AI | Google’s “best model yet for coding performance and complex prompts.” |
| Gemini 2.0 Flash Thinking Experimental | Experimental | Gemini app (all users), Google AI Studio | Combines “Flash’s speed with the ability to reason through more complex problems.” |

As someone who uses Gemini largely for writing support and occasional curiosity questions, I’m not sure if I’ll truly feel the difference between 1.5 Flash and the new-and-improved 2.0 Flash. I do imagine those who leverage Gemini for more complex tasks and building will reap the rewards of the low-latency model.

In any case, I’ll be eager to see how 2.0 Flash handles image generation, which is “coming soon” along with text-to-speech, according to Google.